Despite having a passion for technology, I generally upgrade my phone only every 6 years or so. One of the features I have been most excited about this time around is the LiDAR sensor on the iPhone 17 Pro. After some initial success using Niantic’s free Scanniverse app to help make furniture decisions while apartment hunting, I decided to have a go at using it to capture some information to feed OpenStreetMap, my favourite open-data obsession.

Scanning

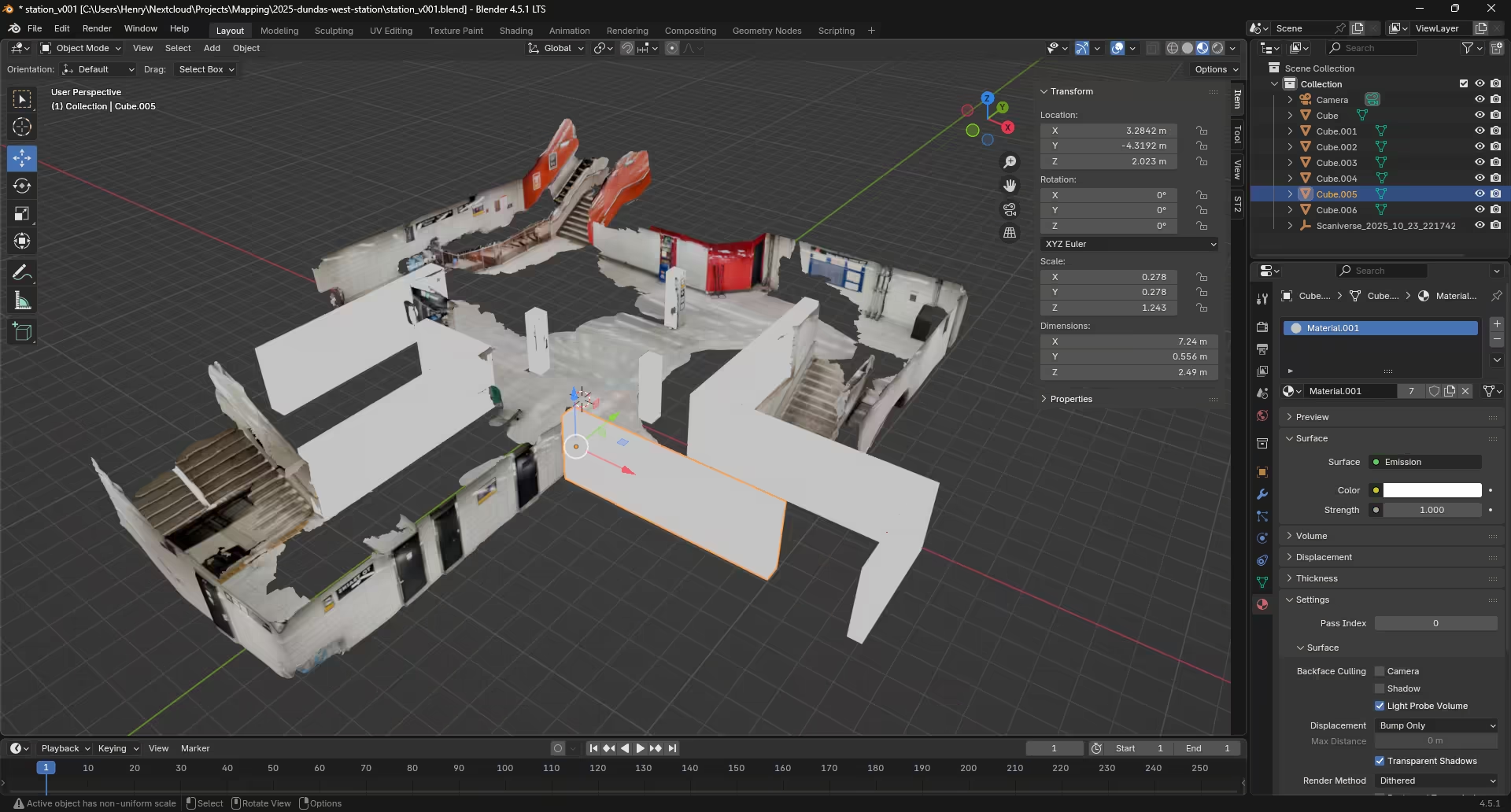

The scanning part is easy! Last week after side project night[1] at 1RG, I fired up Scanniverse as I entered the subway and went to town walking around the concourse level with my phone, trying to avoid anybody who might walk through the shot. The model is full of holes and far from perfect, but the goal here isn’t a digi-double; I just need the dimensions of the structure. This part took about 5 minutes, including a few retries.

I’ve used Meshroom in the past for scene reconstruction and 3D camera tracking, and Polycam for quickly grabbing space dimensions and floor plans on a friend’s device. Meshroom is great and still my preferred choice for more advanced photogrammetry pipelines, but the fact that I can get dimensionally accurate models of spaces in 5 minutes by waving my phone around is kind of amazing. Scanniverse hits a sweet spot: super basic to use, with good-enough output for 3D meshes. It can also generate Gaussian splats, which are super cool… and perhaps a topic for the future.

After bringing my scan into Blender as a USDZ file, I deleted the poorly captured ceiling so none of the floor space was obscured, filled in some of the missing walls to help with tracing later, and rendered it out with an orthographic camera. Because the scans are dimensionally accurate, I also took note of the height (2.5 m) to add as a property to the rooms later in OSM.
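For anyone repeating this step, here is a rough sketch of how the scale of an orthographic render could be written down so that pixel distances in the image can be converted back to metres. It assumes the Blender scene is in metres, that the render is at 100% resolution, and that the camera’s orthographic scale spans the larger of the two render dimensions (Blender’s default sensor fit). The function names and the numbers are made up for illustration.

```python
def metres_per_pixel(ortho_scale: float, res_x: int, res_y: int) -> float:
    """Ground distance covered by one pixel of an orthographic render.

    Assumption: ortho_scale (in metres) spans the larger render dimension.
    """
    return ortho_scale / max(res_x, res_y)


def pixels_to_metres(pixels: float, ortho_scale: float, res_x: int, res_y: int) -> float:
    """Convert a distance measured on the rendered image into metres."""
    return pixels * metres_per_pixel(ortho_scale, res_x, res_y)


# Hypothetical render: a 40 m wide view rendered at 4000 x 2000 pixels.
scale = metres_per_pixel(ortho_scale=40.0, res_x=4000, res_y=2000)
wall_length = pixels_to_metres(250, ortho_scale=40.0, res_x=4000, res_y=2000)
print(f"{scale:.3f} m per pixel, 250 px is about {wall_length:.2f} m")
```

Keeping a figure like this next to the image makes it possible to sanity-check the size of the floor plan once it has been stretched over the map.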

Mapping

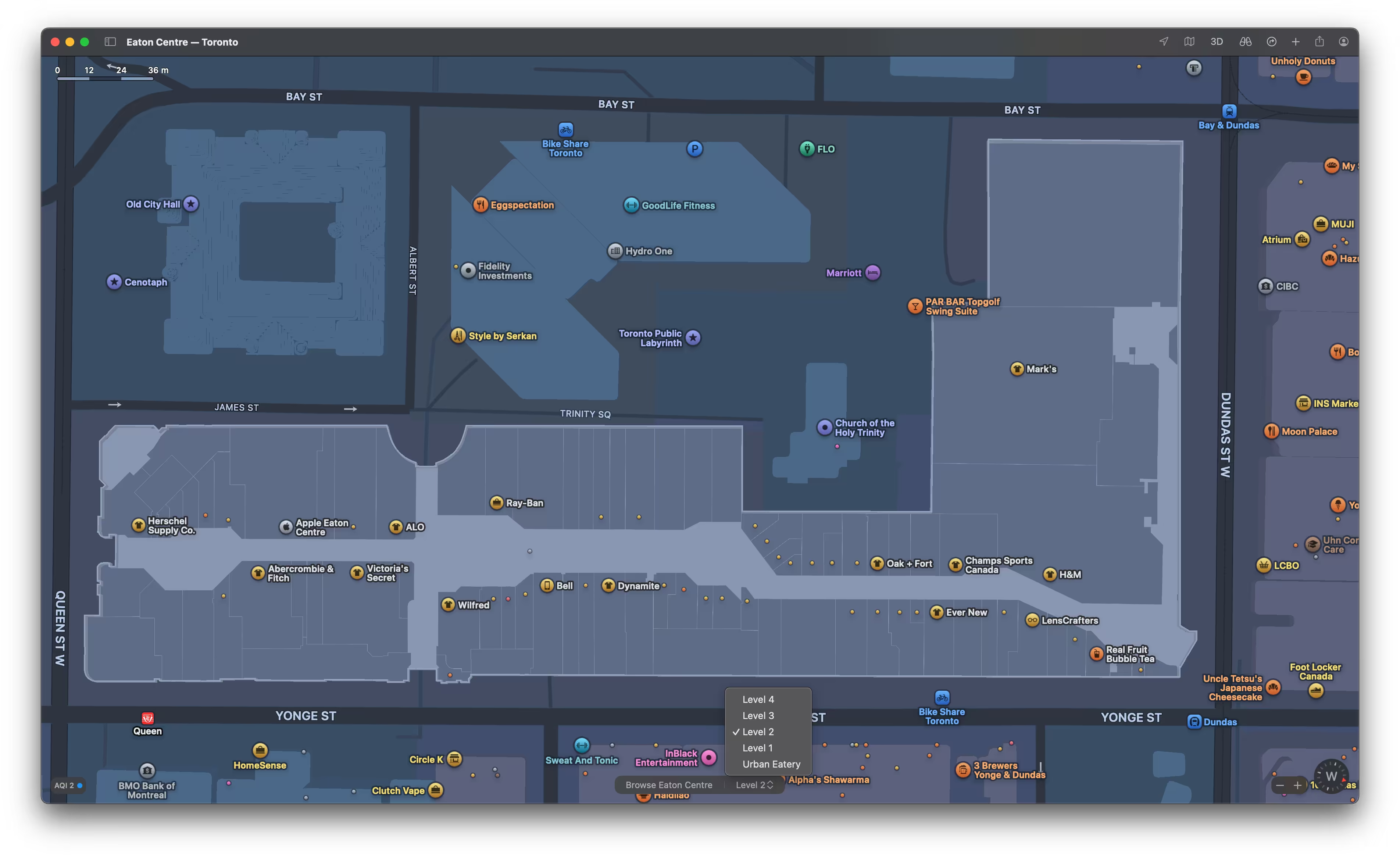

Interior mapping has existed for a while in Google and Apple Maps; you’ll sometimes see interior maps available in airports or shopping centres. If you’ve never seen them before, here’s an example of Toronto’s Eaton Centre in Apple Maps.

On the OSM side of things, there are far fewer examples of great indoor maps. Today the user experience for surveying indoor spaces and bringing that data into editors isn’t nearly as polished as editing from satellite imagery is, but here’s how I went about it!

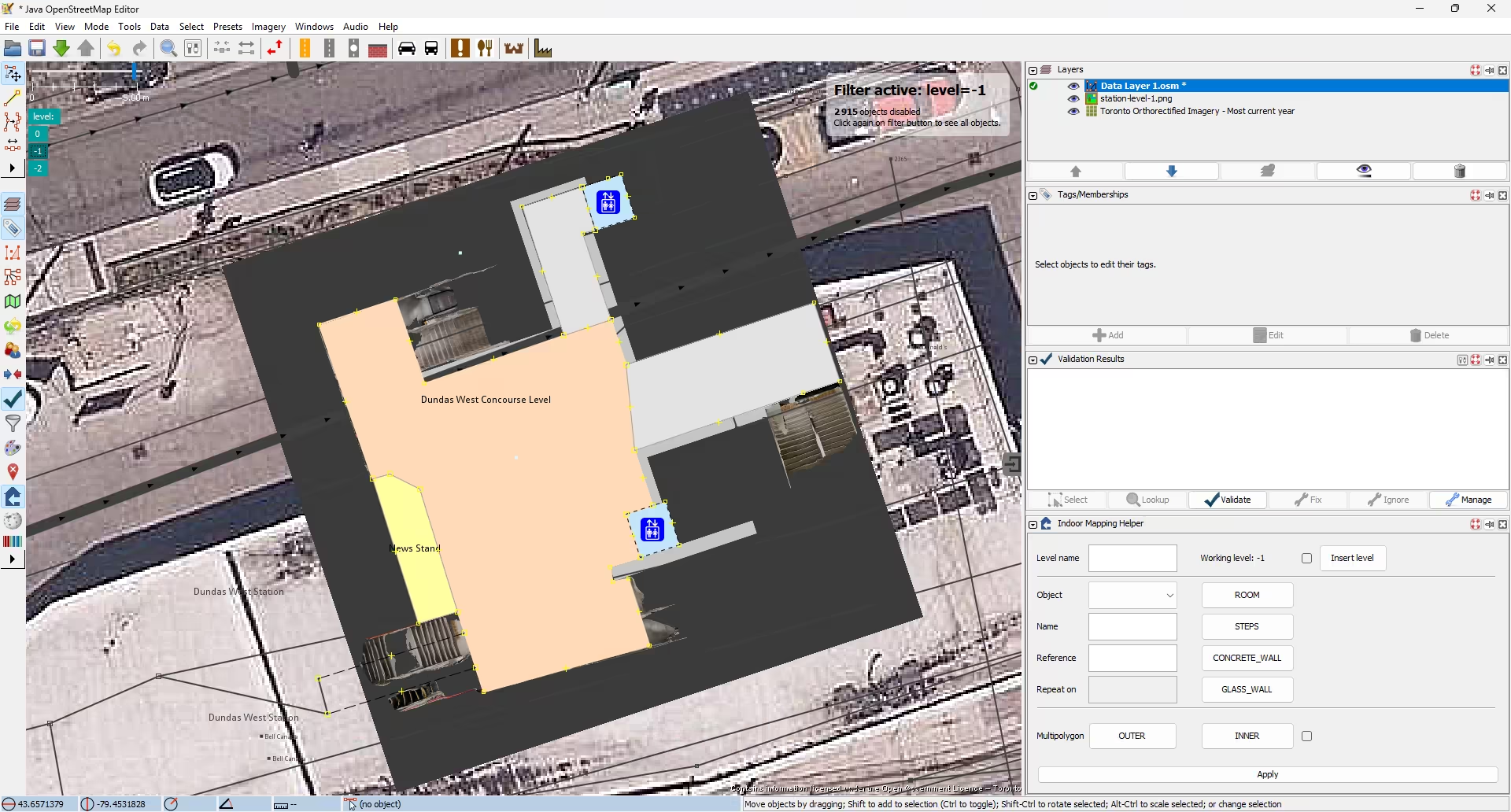

First I had to get my rendered floor plan into an OSM editor to use as a reference. Normally I use the iD editor built into the OSM website, but as of writing it doesn’t support importing and aligning arbitrary images[2]. JOSM supports this with the PicLayer plugin, and while I generally find JOSM pretty unintuitive to use, I was able to tough it out here. After aligning the image to the satellite imagery as best I could based on my knowledge of the space, I added shapes for the rooms, hallways, and elevators, and pushed up my changes for continued editing in iD.

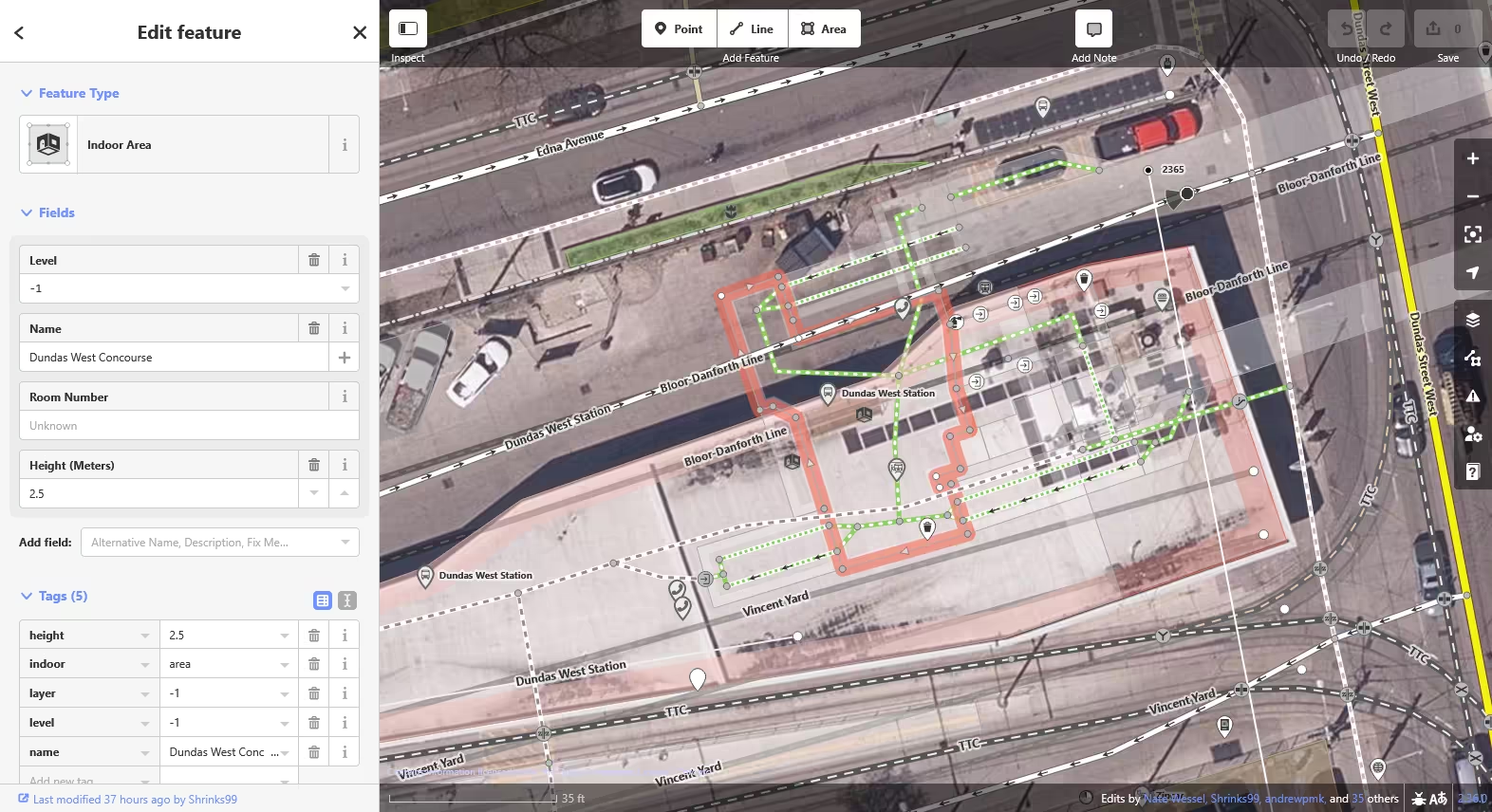

If you’re unfamiliar with iD, this view probably doesn’t look any more intuitive than the last one… But I’ve been using this editor for more than 10 years now so I guess I like it. Here I added the stairwell rooms and paths for routing and adjusted the tracks and platforms based on the newly landmarked concourse level. One of the best parts of iD is the iD tagging schema where developers have done a great job of formalizing OSM’s folksonomy of tags into a list of suggested fields for users. If you provide people with clear form fields to fill out, you’ll get better data!
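To make the tagging concrete, here is a small sketch of the kind of tag set a traced room ends up with under the Simple Indoor Tagging scheme (`indoor=*`, `level=*`, plus an optional `height=*`). The helper function is hypothetical, and the level value is a placeholder since it depends on how the station’s floors are numbered; only the 2.5 m height comes from the scan.

```python
from typing import Dict, Optional

# indoor=* values defined by the Simple Indoor Tagging scheme.
INDOOR_KINDS = {"room", "area", "corridor", "wall"}


def indoor_tags(kind: str, level: str, height_m: Optional[float] = None,
                name: Optional[str] = None) -> Dict[str, str]:
    """Build the tags for one indoor feature (hypothetical helper)."""
    if kind not in INDOOR_KINDS:
        raise ValueError(f"unknown indoor feature type: {kind!r}")
    tags = {"indoor": kind, "level": level}
    if height_m is not None:
        # OSM heights default to metres, so the number is written bare.
        tags["height"] = f"{height_m:g}"
    if name:
        tags["name"] = name
    return tags


# Placeholder level "-1"; the height is the value measured from the scan.
print(indoor_tags("room", level="-1", height_m=2.5))
```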

Conclusion

If you want to see this work rendered, you can check it out using OpenLevelUp!

Dundas West is nicer and more accurate now, but will anybody actually use this data? As of today, most popular OpenStreetMap clients don’t support indoor tagging, and the ones that do have been built with pretty much the sole purpose of displaying indoor map data for editors. I think this is mostly a chicken-and-egg problem. While the Simple Indoor Tagging spec is reasonably complete and implemented both in iD and JOSM (with plugins), iD doesn’t have a great workflow for getting survey data into the program and doesn’t provide an interface for filtering data by level values. You may also note that while rendering out to an image worked okay in the end, I lost the model’s accurate scale information along the way.
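For a sense of what filtering by level involves, here is a minimal sketch that is not based on how any existing editor does it. It assumes features are plain dictionaries with a `tags` mapping and that `level` holds either a single number or a semicolon-separated list such as `0;1`. Other notations, like ranges, are not handled, and the sample features are invented.

```python
from typing import Dict, List, Set


def parse_levels(value: str) -> Set[float]:
    """Parse a level=* value like "-1" or "0;1" into a set of numbers."""
    levels = set()
    for part in value.split(";"):
        part = part.strip()
        if not part:
            continue
        try:
            levels.add(float(part))
        except ValueError:
            pass  # ignore anything that isn't a plain number
    return levels


def on_level(features: List[Dict], level: float) -> List[Dict]:
    """Keep only the features whose level tag includes the given level."""
    return [
        f for f in features
        if level in parse_levels(f.get("tags", {}).get("level", ""))
    ]


# Invented sample data for illustration.
features = [
    {"id": 1, "tags": {"indoor": "room", "level": "-1"}},
    {"id": 2, "tags": {"highway": "steps", "level": "-1;0"}},
    {"id": 3, "tags": {"indoor": "corridor", "level": "0"}},
    {"id": 4, "tags": {"building": "yes"}},
]
print([f["id"] for f in on_level(features, -1)])
```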

All of these are solvable problems, and it’s important to remember that this is all open source software, most of it developed on either a volunteer or grant-funded basis. My hope is that the authoring tools continue to improve, leading to better data availability, which should in turn push more popular clients like CoMaps to implement indoor rendering and keep moving the navigation experience forward.

As I think I’ve been able to show here though, the question of where to obtain good ground-truth data for mapping indoor spaces is pretty much answered, and it fits in your pocket!

Footnotes

1. If you’re the type of person that thinks this stuff is neat and in Toronto you should come hang! Check out the 1RG calendar on Luma.

2. If you must do this in iD today, you can use Map Warper to align your image with a reference map. I personally find relying upon a 3rd party map alignment service to be a less-than-great user experience, but hey it’s there if you need it!